Qeexo AutoML enables machine learning application developers to customize inference settings for their use case. These parameters are critical for achieving the best live performance of models on the embedded target. In this article, we discuss the two parameters associated with the inference settings: instance length and classification interval.

Instance Length

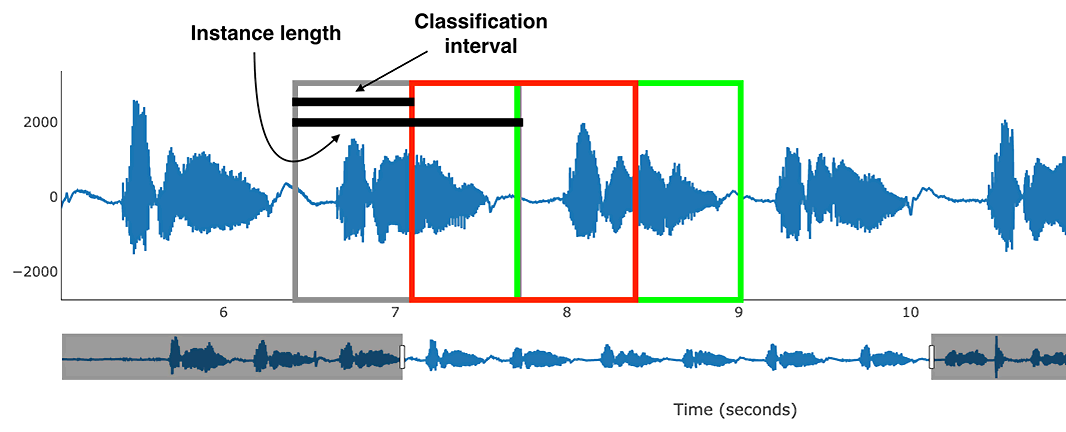

Instance length is the time period over which one prediction is made from raw sensor data. It is measured in milliseconds. Based on the selected sensors and their output data rates (ODRs), this time is converted to a number of raw sensor data samples. These samples are used to compute features both for training ML models and during on-device inference. If only one sensor is used in the application, instance length is converted from milliseconds to a number of samples using that sensor’s ODR. If there are multiple sensors with different ODRs, however, the conversion is based on the sensor with the highest ODR, and the number of samples for the other sensors is determined proportionally. Below are some examples for the Arduino sensor board with an instance length of 500 milliseconds (0.5 seconds).

Setting 1: Microphone with ODR of 16000 Hz. Number of samples = 0.5 s × 16000 Hz = 8000.

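Setting 2: Accelerometer and gyroscope with ODR of 952 Hz and microphone with ODR of 16000 Hz.

For microphone, number of samples = 0.5 s × 16000 Hz = 8000.

For accelerometer and gyroscope, number of samples = 0.5 s × 952 Hz = 476.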
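The millisecond-to-sample conversion described above can be sketched in a few lines of Python. This is only an illustration: the function name `instance_length_to_samples` is hypothetical, and rounding to the nearest integer is an assumption, since the article does not state how Qeexo AutoML rounds fractional sample counts.

```python
def instance_length_to_samples(instance_length_ms: float, odr_hz: float) -> int:
    """Convert an instance length in milliseconds to a sample count at a given ODR.

    Rounding to the nearest integer is an assumption made for this sketch.
    """
    return round(instance_length_ms / 1000.0 * odr_hz)


# ODRs taken from the Arduino examples in this article.
odrs_hz = {"microphone": 16000, "accelerometer": 952, "gyroscope": 952}

# Sample counts per sensor for a 500 ms instance length.
samples = {name: instance_length_to_samples(500, odr) for name, odr in odrs_hz.items()}
print(samples)  # {'microphone': 8000, 'accelerometer': 476, 'gyroscope': 476}
```

Each sensor's sample count scales with its own ODR, which is what "determined proportionally" amounts to for a fixed time window.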

How to Determine the Instance Length

A long instance length corresponds to a larger number of samples for featurization. By the basic principles of the Fourier transform, more data points yield finer frequency resolution, which captures more information from the signals. A longer instance therefore produces a greater number of features for ML model training.
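To make the frequency-resolution point concrete: for a discrete Fourier transform of N samples taken at a sampling rate of f_s, adjacent frequency bins are spaced f_s / N apart. The sketch below applies that relation to the microphone ODR used in this article. The function name is hypothetical, and the snippet says nothing about which features Qeexo AutoML actually computes.

```python
def frequency_resolution_hz(odr_hz: float, num_samples: int) -> float:
    """Spacing between adjacent DFT bins for num_samples taken at odr_hz."""
    return odr_hz / num_samples


# Microphone at 16000 Hz with three different instance lengths (in samples).
for num_samples in (64, 8000, 12000):
    resolution = frequency_resolution_hz(16000, num_samples)
    print(f"{num_samples} samples -> {resolution:.2f} Hz per bin")
```

A 64-sample instance resolves frequencies only in 250 Hz steps, whereas an 8000-sample instance resolves them in 2 Hz steps.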

However, for a fixed total recording length, a long instance length reduces the training dataset size. For example, if a signal of length L seconds is divided into segments of T seconds each, we get more segments if T is smaller and fewer if T is larger. For on-device live testing, a larger T also means that more data must be collected at once to form a single prediction. Because embedded devices have limited memory, there is an upper limit on the instance length. Too small an instance length, on the other hand, can cause numerical instability in signal processing algorithms and may not capture sufficient discriminative information from the signals. For these reasons, AutoML requires an instance length of at least 64 samples.
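The effect of instance length on dataset size can be illustrated with a small calculation. The sketch assumes non-overlapping segments and a hypothetical 60-second recording; the article does not say whether Qeexo AutoML overlaps segments during training, so the numbers only show the trend.

```python
def num_instances(total_ms: int, instance_ms: int) -> int:
    """Number of whole, non-overlapping instances that fit in a recording.

    Non-overlapping segmentation is an assumption made for this sketch.
    """
    return total_ms // instance_ms


total_ms = 60_000  # hypothetical 60-second recording
for instance_ms in (250, 500, 750):
    print(f"T = {instance_ms} ms -> {num_instances(total_ms, instance_ms)} instances")
```

Tripling the instance length from 250 ms to 750 ms cuts the number of training instances from 240 to 80.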

Consider the following example for the microphone sensor (16000 Hz) on Arduino. The supported instance length is at minimum 64 samples and at most 12000 samples. In milliseconds, this represents a range from 4 milliseconds to 750 milliseconds: 64 / 16000 Hz = 0.004 s = 4 ms, and 12000 / 16000 Hz = 0.75 s = 750 ms.

If multiple sensors (accelerometer & gyroscope; 952Hz ODR) are chosen, the range then becomes 4 to 1075 milliseconds.

Selecting the Best Instance Length

Qeexo AutoML supports either determining the instance length automatically or setting it manually. The “Determine Automatically” option takes the minimum and maximum permissible values of instance length and searches for the optimal value within this range, aiming to maximize classification performance. Keep in mind that this optimization makes model training take longer than it does with a manually selected instance length.

Manual selection is constrained to the same minimum and maximum permissible values. Any value within this range can be chosen for building the models. One way to estimate an instance length manually is to visualize the signal. As a general guideline, choose an instance length that is neither so short that it misses part of the signal nor so long that it includes unnecessary noise over multiple instances.

Instance length is a common parameter across all of the models, i.e., an instance length determined automatically or manually is applicable across all of the models.

Classification Interval (CI)

Classification interval refers to the time interval in milliseconds between any two consecutive classifications when live streaming sensor signals, as illustrated in Fig. 3. It is a user-defined parameter and accepts a value between 100 milliseconds (10 classifications per second) and 3600 seconds (1 classification per hour). Classification interval is not optimized, even when the “Determine Automatically” option is selected.

Shorter intervals make predictions more frequent but consume more power, while longer intervals save power but can miss short bursts of activity in the live stream when they occur between two consecutive classifications.
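The relationship between classification interval and prediction frequency can be sketched as follows. The limits are the ones stated above (100 milliseconds to 3600 seconds); the constant and function names are hypothetical and are not part of any Qeexo AutoML API.

```python
CI_MIN_MS = 100            # lower limit stated in this article
CI_MAX_MS = 3600 * 1000    # upper limit stated in this article (3600 seconds)


def classifications_per_hour(ci_ms: int) -> float:
    """Number of classifications per hour for a given classification interval."""
    if not CI_MIN_MS <= ci_ms <= CI_MAX_MS:
        raise ValueError(f"classification interval must be in [{CI_MIN_MS}, {CI_MAX_MS}] ms")
    return 3_600_000 / ci_ms


print(classifications_per_hour(100))        # 36000.0 (10 per second)
print(classifications_per_hour(1_000))      # 3600.0 (1 per second)
print(classifications_per_hour(3_600_000))  # 1.0 (1 per hour)
```

Since each classification costs some computation, the hourly count gives a rough feel for how the power trade-off scales with the chosen interval.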

The detailed description of the Classification Interval is in this blog post.